Why Your Healthcare AI Needs a Heart (Not Just an Algorithm)

Let’s talk about something that keeps healthcare executives up at night: how do you make artificial intelligence feel less, well, artificial? You’ve probably experienced it yourself—that moment when you’re trying to schedule a doctor’s appointment through an automated system, and you find yourself yelling “REPRESENTATIVE!” at your phone like it’s personally wronged you. We’ve all been there, haven’t we?

Here’s the thing: healthcare AI is remarkably good at certain tasks. In some studies it has matched specialists at detecting disease in medical images, it can flag signs of patient deterioration before they are obvious at the bedside, and it can process millions of data points in seconds. What it often can’t do is make your grandmother feel heard when she’s anxious about her test results. That’s exactly the problem we need to solve.

The healthcare industry stands at a fascinating crossroads. On one side, we have the immense promise of AI to revolutionize patient care, reduce costs, and save lives. On the other, we have the fundamental human need for empathy, connection, and understanding during our most vulnerable moments. Balancing these isn’t just nice to have—it’s absolutely essential for the future of healthcare technology.

Think of it this way: automation without empathy is like a surgeon with steady hands but no bedside manner. Technically proficient? Absolutely. Would you want them to operate on your loved ones? Maybe not. The magic happens when we bring these two forces together, creating healthcare experiences that are both efficient and deeply human.

The Real Cost of Cold Technology in Healthcare

Remember the last time you felt genuinely cared for by a healthcare provider? Chances are, it wasn’t because they rattled off your lab values with computer-like precision. It was probably because they looked you in the eye, listened to your concerns, and treated you like a person rather than a patient ID number. That’s what we’re talking about when we discuss empathy in healthcare—and it’s what’s often missing from our rush toward automation.

The research paints a sobering picture. Studies have linked patients’ perception of clinician empathy to better health outcomes, higher satisfaction scores, and greater adherence to treatment plans. Yet as we introduce more AI into the patient experience, we risk creating a technological barrier between caregivers and those they serve. It’s like putting up a glass wall: you can see through it, but you can’t truly connect.

Consider the typical patient journey today. Sarah, a 52-year-old woman, notices concerning symptoms and turns to her healthcare system’s AI-powered symptom checker. The chatbot efficiently asks relevant questions and suggests she schedule an appointment. So far, so good. But when Sarah tries to explain that she’s terrified because her mother died of the same symptoms at her age, the bot offers a generic “I understand” and moves on to scheduling. Does it really understand? Can it?

This is where the empathy gap becomes a chasm. Healthcare isn’t just about diagnosing and treating conditions—it’s about addressing the fears, anxieties, and hopes that come with illness. When we design AI systems without considering these emotional dimensions, we’re essentially building half a solution. We’re creating tools that can tell patients what’s wrong but can’t comfort them when they’re scared.

The business case for empathy is compelling too. Healthcare organizations that prioritize patient experience tend to report fewer complaints, fewer malpractice claims, and better online reviews. In an era where patients have choices about where they receive care, the human touch becomes a competitive advantage. Yet many healthcare AI implementations focus solely on efficiency metrics (appointment booking rates, diagnostic accuracy, and processing speed) while overlooking how these interactions make people feel.

Here’s a question worth pondering: if your AI reduces appointment scheduling time by 40% but increases patient anxiety by 30%, have you actually improved anything? The answer isn’t as straightforward as an efficiency dashboard might suggest.

Building Bridges: Design Principles for Empathetic Healthcare AI

So how do we actually build healthcare AI that feels human? It starts with fundamentally rethinking how we approach UX design in this space. We need to stop treating empathy as a nice-to-have feature and start seeing it as a core functional requirement, just like security or accuracy.

The first principle is simple but profound: design for emotional states, not just tasks. Traditional UX design maps out user journeys based on what people want to accomplish—book an appointment, refill a prescription, view test results. But empathetic UX design goes deeper, asking: what is this person feeling at each stage of their journey? A patient checking test results might be anxious, scared, or hopeful. Your interface should acknowledge and respond to these emotional realities.

Think about conversational AI, for instance. Instead of programming chatbots to simply extract information and provide responses, we should design them to recognize and respond to emotional cues. When a patient types, “I’m really worried about this,” the system should do more than acknowledge the statement and move on. It might offer reassurance, provide relevant educational resources, or—and here’s the crucial part—know when to seamlessly connect the patient with a human professional.
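To make that concrete, here is a minimal Python sketch of the decision a chatbot might make after each patient message. It uses simple keyword matching purely for illustration; the cue lists, the `Action` names, and `plan_response` are all hypothetical, and a real deployment would rely on a clinically validated classifier rather than a word list.

```python
import re
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"  # no emotional cue found: carry on with the task
    REASSURE = "reassure"  # acknowledge the worry and offer resources
    HANDOFF = "handoff"    # connect the patient with a human professional


# Illustrative cue phrases only; a real system would use a validated classifier.
WORRY_CUES = ("worried", "scared", "afraid", "anxious", "nervous", "terrified")
HANDOFF_CUES = ("talk to someone", "speak to someone", "real person", "died of")


def _mentions(text: str, cues: tuple[str, ...]) -> bool:
    """True if any cue appears as a whole word or phrase in text."""
    return any(re.search(rf"\b{re.escape(cue)}\b", text) for cue in cues)


def plan_response(message: str) -> Action:
    text = message.lower()
    # Check hand-off cues first: a frightened patient who needs a person gets one.
    if _mentions(text, HANDOFF_CUES):
        return Action.HANDOFF
    if _mentions(text, WORRY_CUES):
        return Action.REASSURE
    return Action.CONTINUE
```

Checking hand-off cues before worry cues means a message like Sarah’s, which is both frightened and specific, goes to a person instead of getting a generic “I understand.”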

Another vital principle is transparency with humanity. Patients deserve to know when they’re interacting with AI, but that disclosure shouldn’t feel cold or robotic. Instead of “You are now chatting with an automated assistant,” imagine something like, “I’m an AI assistant here to help you 24/7, but I can connect you with a team member anytime you’d prefer to speak with a person.” See the difference? The second approach acknowledges the AI’s limitations while emphasizing patient choice and access to human support.

Personalization plays an enormous role here too, but not the creepy kind that makes people wonder what data you’re collecting about them. We’re talking about AI that remembers context and treats patients as individuals. If someone has expressed anxiety about needles in the past, the system should flag this when scheduling a blood draw and proactively offer stress-reduction techniques or alert the phlebotomy team. This isn’t complicated technology—it’s thoughtful design that puts patient needs first.
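The needle example can be sketched as a lookup between what a patient has chosen to share and the appointment being booked. This is an illustrative Python sketch; the concern tags, appointment types, and accommodation text are hypothetical placeholders, not a standard vocabulary.

```python
from dataclasses import dataclass, field


@dataclass
class PatientProfile:
    patient_id: str
    # Concerns the patient has chosen to share (hypothetical tag names).
    noted_concerns: set[str] = field(default_factory=set)


# Hypothetical mapping from appointment type to concerns it may trigger.
RELEVANT_CONCERNS = {
    "blood_draw": {"needle_anxiety"},
    "vaccination": {"needle_anxiety"},
    "mri": {"claustrophobia"},
}

# Hypothetical accommodations offered for each concern.
ACCOMMODATIONS = {
    "needle_anxiety": [
        "Send a breathing-exercise guide with the confirmation",
        "Alert the phlebotomy team to allow extra time",
    ],
    "claustrophobia": ["Offer a pre-scan call with the imaging team"],
}


def accommodations_for(profile: PatientProfile, appointment_type: str) -> list[str]:
    """List the proactive steps to take when booking this appointment."""
    triggered = profile.noted_concerns & RELEVANT_CONCERNS.get(appointment_type, set())
    steps: list[str] = []
    for concern in sorted(triggered):
        steps.extend(ACCOMMODATIONS.get(concern, []))
    return steps
```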

The interface itself should feel warm and approachable. This means carefully considering every design element—color palettes that feel calming rather than clinical, language that’s conversational without being unprofessional, and visual elements that feel human-created rather than algorithmically generated. Even the timing of responses matters. An instant reply might seem efficient, but sometimes a brief pause makes the interaction feel more natural, more like a real conversation.
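If you do add a pause, keep it short, proportional to the reply, and never apply it to urgent information. A minimal sketch, where the base delay, per-character rate, and cap are assumed values you would tune through user testing:

```python
def reply_delay_seconds(reply: str, urgent: bool = False, base: float = 0.4,
                        per_char: float = 0.01, cap: float = 2.0) -> float:
    """Return a brief, length-proportional pause before showing a chatbot reply.

    base, per_char, and cap are illustrative defaults, not validated values.
    """
    if urgent or not reply:
        return 0.0  # never slow down urgent or safety-critical information
    return min(base + per_char * len(reply), cap)
```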

We also need to embrace multimodal interactions. Not everyone wants to type their healthcare concerns into a chatbot at 2 AM. Some people prefer voice interactions, others want video calls, and many still appreciate the option to pick up the phone and talk to an actual human being. Your AI-powered healthcare UX should gracefully support all these preferences without making patients feel like they’re choosing the “difficult” option by wanting human contact.

The Human-AI Partnership: When to Automate and When to Involve People

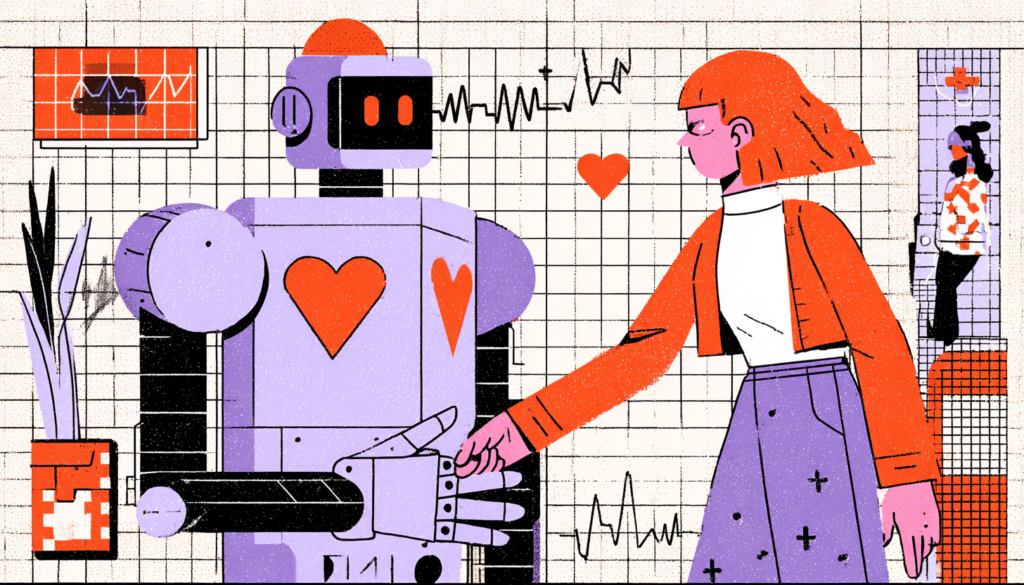

Here’s where things get really interesting, and where many healthcare organizations stumble. The question isn’t whether to use AI or humans. It’s about orchestrating a seamless partnership between the two, so that each handles what it does best.

AI excels at consistency, scalability, and processing vast amounts of information. It never gets tired, never has a bad day, and can serve thousands of patients simultaneously. But AI lacks genuine emotional intelligence, can’t adapt to truly novel situations, and sometimes misses the subtle cues that any experienced healthcare professional would catch immediately. Humans, on the other hand, bring empathy, intuition, contextual understanding, and the ability to make judgment calls in ambiguous situations.

The sweet spot lies in designing systems where AI handles the routine and redirects the complex or emotional to human professionals. Think of it as a dance where both partners know their roles. AI can manage appointment scheduling, medication reminders, basic symptom checking, and routine follow-ups. But when a patient expresses distress or confusion, or has complex questions, the system should seamlessly transition them to a human professional, and do so in a way that feels natural rather than like they’re being “escalated” because they’re difficult.
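That division of labor can be expressed as a small routing rule. In this hypothetical sketch, the intent labels are assumed to come from an upstream classifier, and anything the system doesn’t recognize defaults to a person rather than to the bot:

```python
# Hypothetical intent labels, e.g. produced by an upstream intent classifier.
ROUTINE_INTENTS = {
    "schedule_appointment",
    "medication_reminder",
    "refill_request",
    "routine_follow_up",
}


def route(intent: str, distress_detected: bool, asked_for_human: bool) -> str:
    """Return "ai" or "human" for the next step of a conversation."""
    if asked_for_human or distress_detected:
        return "human"  # emotion or an explicit request always wins
    if intent in ROUTINE_INTENTS:
        return "ai"
    return "human"  # unrecognized or complex requests default to a person
```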

This handoff is where many systems fail spectacularly. You’ve probably experienced it—being transferred from an automated system to a human who has zero context about what you’ve already shared, forcing you to repeat everything. Maddening, right? Empathetic UX design ensures that when transitions happen, the human professional receives complete context, so patients never have to start their story over.
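One way to avoid the repeat-your-story problem is to have the AI assemble a structured hand-off packet that the human sees before saying hello. The class and fields below are an assumption about what is useful, not a standard schema:

```python
from dataclasses import dataclass, field


@dataclass
class HandoffPacket:
    patient_id: str
    reason: str                                                 # why the AI handed off
    stated_concerns: list[str] = field(default_factory=list)
    answers: dict[str, str] = field(default_factory=dict)       # questions already asked
    emotional_notes: list[str] = field(default_factory=list)    # tone the patient expressed

    def summary(self) -> str:
        """Plain-text brief shown to the human before the conversation resumes."""
        lines = [f"Patient {self.patient_id}: handed off because {self.reason}"]
        if self.stated_concerns:
            lines.append("Concerns: " + "; ".join(self.stated_concerns))
        for question, answer in self.answers.items():
            lines.append(f"- {question}: {answer}")
        if self.emotional_notes:
            lines.append("Tone: " + "; ".join(self.emotional_notes))
        return "\n".join(lines)
```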

Consider medication management as an example. An AI system can perfectly handle routine prescription refills, sending automated reminders when it’s time to reorder and flagging potential drug interactions. But when a patient reports side effects or wants to discuss stopping their medication, that conversation needs human involvement—immediately and without friction. The AI’s role becomes supportive: gathering initial information, scheduling a prompt consultation, and ensuring the clinical team has all relevant data before the conversation begins.
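Both halves of that example are easy to sketch. Below, the reminder date is simply the last fill date plus the days’ supply minus a lead time (the five-day default is an assumption), and the topic labels that route to the clinical team are hypothetical:

```python
from datetime import date, timedelta


def refill_reminder_date(last_fill: date, days_supply: int, lead_days: int = 5) -> date:
    """Date to send an automated reorder reminder (lead_days is an assumed default)."""
    return last_fill + timedelta(days=max(0, days_supply - lead_days))


# Hypothetical topics that must go to the clinical team rather than the bot.
CLINICIAN_TOPICS = {"side_effect", "stop_medication", "dose_change"}


def handle_medication_message(topic: str) -> str:
    if topic in CLINICIAN_TOPICS:
        # AI's supporting role: book a prompt consult and pass along the history.
        return "schedule_prompt_consult_and_share_history"
    if topic == "refill":
        return "process_refill"
    return "ask_clarifying_question"
```

With the default lead time, a 30-day supply filled on March 1 triggers a reminder on March 26.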

Some healthcare organizations are implementing what I call “collaborative intelligence” models. In these systems, AI works alongside human professionals in real-time, analyzing patient interactions and providing clinicians with insights, suggestions, and relevant information without replacing the human element entirely. A nurse video-calling a patient might have AI assistance that flags potential concerns based on the conversation, suggests questions to ask, or pulls up relevant medical history—all invisible to the patient, who simply experiences an exceptionally well-informed and attentive healthcare provider.

The key is maintaining what researchers call “meaningful human control.” Patients should always have the option to speak with a person, and that option should be obvious and easy to access—not buried three menus deep or only available during limited hours. Moreover, human professionals should have the ability to override AI recommendations when their clinical judgment suggests a different approach. Technology should augment human decision-making, not replace it.
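Meaningful human control is partly a data-model question: the record should show that a clinician made the call, what the AI suggested, and whether it was overridden. A minimal sketch with hypothetical names:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    action: str
    decided_by: str            # the accountable clinician, never the model
    ai_suggestion: str
    overrode_ai: bool
    rationale: Optional[str] = None


def finalize(ai_suggestion: str, clinician_id: str,
             clinician_action: Optional[str] = None,
             rationale: Optional[str] = None) -> Decision:
    """Record the final decision; the clinician's choice always takes precedence."""
    if clinician_action is None or clinician_action == ai_suggestion:
        return Decision(ai_suggestion, clinician_id, ai_suggestion, overrode_ai=False)
    return Decision(clinician_action, clinician_id, ai_suggestion,
                    overrode_ai=True, rationale=rationale)
```

The override path deliberately demands no extra steps; adding friction to overrides would quietly nudge clinicians toward accepting the AI’s suggestion.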

We also need to consider the training implications. Healthcare professionals working with AI-enhanced systems need education not just on how the technology works, but on how to maintain empathetic patient relationships within these new workflows. How do you balance looking at an AI-generated clinical summary with maintaining eye contact with your patient? How do you explain AI-assisted diagnoses in ways that build trust rather than confusion? These human skills become even more critical as automation increases.

Measuring Success: Beyond Efficiency Metrics

If you can’t measure it, you can’t improve it—that old management adage holds true for empathetic UX in healthcare AI. But here’s the challenge: we’re really good at measuring the wrong things. We track appointment booking conversion rates, average handling times, and system uptime. All important, sure. But are we measuring whether patients feel cared for? Whether they trust the system? Whether their anxiety decreased or increased after the interaction?

Traditional healthcare metrics and patient satisfaction scores give us some insight, but they often miss the nuances of how AI impacts the patient experience. We need new frameworks that capture both the efficiency gains and the human impact of our technology implementations. Think of it as a dual-lens approach—one lens focused on operational excellence, the other on emotional resonance.

Start with sentiment analysis, but go beyond simple positive/negative categorizations. Use natural language processing to understand the emotional journey within individual interactions. Did the patient start anxious and end reassured? Did confusion turn to clarity? These emotional trajectories tell you more about your UX effectiveness than raw satisfaction scores ever could.
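A simple way to turn per-message sentiment into a trajectory is to compare the opening and closing turns of a conversation. This sketch assumes you already have a sentiment score between -1 and 1 for each patient message from whatever NLP model you use; the window size and threshold are illustrative, not validated:

```python
from statistics import mean


def emotional_trajectory(scores: list[float], window: int = 2,
                         threshold: float = 0.3) -> str:
    """Classify how a patient's sentiment moved across one conversation.

    scores: per-message sentiment in [-1, 1] for the patient's turns, in order,
    produced by a sentiment model that is assumed, not specified here.
    """
    if len(scores) < 2:
        return "insufficient_data"
    k = min(window, len(scores) // 2)   # don't let start and end windows overlap
    change = mean(scores[-k:]) - mean(scores[:k])
    if change >= threshold:
        return "improved"               # e.g. started anxious, ended reassured
    if change <= -threshold:
        return "worsened"
    return "flat"
```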

Patient effort scores offer valuable insights too. How hard did someone have to work to accomplish their goal? Did they have to repeat information multiple times? Did they get stuck in automated loops? Lower effort generally goes hand in hand with higher satisfaction, but in healthcare we also need to consider emotional effort: how much stress or anxiety did the interaction create?
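Some of these effort signals can be counted directly from interaction logs. The event vocabulary here (`asked_field`, `menu_visit`, `transfer`) is hypothetical; adapt it to whatever your platform records. Emotional effort won’t show up in a log, so pair these counts with a short post-interaction question.

```python
from collections import Counter


def effort_indicators(events: list[tuple[str, str]]) -> dict[str, int]:
    """Summarize friction in one interaction from a simple event log.

    Each event is (kind, detail), e.g. ("asked_field", "date_of_birth") or
    ("menu_visit", "billing"). The event kinds are hypothetical.
    """
    asked = Counter(detail for kind, detail in events if kind == "asked_field")
    visits = Counter(detail for kind, detail in events if kind == "menu_visit")
    return {
        # Each extra time the same question was asked counts once.
        "repeated_questions": sum(n - 1 for n in asked.values() if n > 1),
        # Each return to an already-visited menu counts as one loop.
        "menu_loops": sum(n - 1 for n in visits.values() if n > 1),
        "transfers": sum(1 for kind, _ in events if kind == "transfer"),
    }
```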

Qualitative feedback becomes invaluable here. Regularly conduct user testing sessions with actual patients, not just healthy employees who pretend to be patients. Watch them interact with your AI systems in real-time. Listen to their frustrations, their confusion, and their moments of delight. These sessions will reveal problems that no amount of quantitative data can uncover.

Don’t forget to measure the human side of the equation too. How do your clinical staff feel about the AI tools they’re using? Are these systems making their jobs easier or more frustrating? Are they able to spend more time on meaningful patient interactions, or are they now managing technology instead of caring for people? Staff satisfaction directly impacts patient experience, so these metrics matter enormously.

Consider implementing ongoing feedback loops where patients can quickly rate specific interactions immediately after they occur. A simple “How did we do?” prompt after a chatbot conversation or automated appointment scheduling provides real-time data about UX effectiveness. But make sure you’re actually using this feedback to iterate and improve, not just collecting it to feel good about asking.
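Acting on that feedback starts with knowing which touchpoints are struggling. This sketch averages 1-to-5 ratings per touchpoint and flags the low scorers; the function name, minimum sample size, and threshold are assumed for illustration:

```python
from collections import defaultdict


def flag_touchpoints(ratings, min_responses: int = 20,
                     threshold: float = 3.5) -> dict[str, float]:
    """Return touchpoints whose average 1-5 rating falls below threshold.

    ratings: iterable of (touchpoint, score) pairs. Touchpoints with fewer than
    min_responses ratings are skipped so a handful of answers can't trigger a flag.
    """
    buckets = defaultdict(list)
    for touchpoint, score in ratings:
        buckets[touchpoint].append(score)
    flagged = {}
    for touchpoint, scores in buckets.items():
        if len(scores) < min_responses:
            continue
        average = sum(scores) / len(scores)
        if average < threshold:
            flagged[touchpoint] = round(average, 2)
    # Worst-rated touchpoints first.
    return dict(sorted(flagged.items(), key=lambda item: item[1]))
```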

Look at behavioral indicators too. Are patients choosing to bypass automated systems and call your contact center instead? That may be a warning sign that your AI UX isn’t meeting their needs. Are certain patient demographics opting out of digital tools entirely? That might indicate accessibility issues or trust gaps that need addressing.
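Bypass behavior can be approximated by checking whether a patient called the contact center shortly after a chatbot session. The 24-hour window, the function name, and the input format are assumptions for illustration:

```python
from datetime import datetime, timedelta


def bypass_rate(bot_sessions: list[tuple[str, datetime]],
                calls: list[tuple[str, datetime]],
                window: timedelta = timedelta(hours=24)) -> float:
    """Share of chatbot sessions followed by a call from the same patient within window.

    bot_sessions and calls are (patient_id, start_time) pairs.
    """
    if not bot_sessions:
        return 0.0
    calls_by_patient: dict[str, list[datetime]] = {}
    for patient_id, call_time in calls:
        calls_by_patient.setdefault(patient_id, []).append(call_time)
    bypassed = sum(
        1
        for patient_id, start in bot_sessions
        if any(start <= t <= start + window for t in calls_by_patient.get(patient_id, []))
    )
    return bypassed / len(bot_sessions)
```

Computing the same rate separately for each patient segment (age band, preferred language, and so on) is one way to surface the trust or accessibility gaps described above.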

Creating Healthcare AI That Actually Cares

We’re standing at the beginning of something transformative. Healthcare AI will continue advancing at a breathtaking pace, offering capabilities we can barely imagine today. But the organizations that win—that truly revolutionize healthcare delivery—won’t be those with the most sophisticated algorithms. They’ll be the ones who figure out how to make those algorithms feel human.

This requires a fundamental shift in how we think about healthcare technology development. We need designers who understand psychology as well as they understand pixels. We need engineers who consider emotional impact alongside performance optimization. We need healthcare leaders who recognize that empathy isn’t soft or secondary—it’s a critical component of clinical effectiveness.

The future of healthcare UX lies in invisible intelligence: AI so well integrated and thoughtfully designed that patients rarely have to think about the automation, even though they are always told when they’re talking to it. They just experience care that feels personalized, responsive, and genuinely concerned with their well-being. The technology fades into the background while the human connection moves to the foreground.

This isn’t about resisting automation or romanticizing the past. Healthcare has real problems that AI can help solve: access issues, cost concerns, clinician burnout, and diagnostic errors. But solving these problems without losing our humanity is the real challenge—and the real opportunity.

As you design or implement healthcare AI in your organization, keep asking yourself: would I want my family members to interact with this system when they’re sick or scared? Would I trust it with my most vulnerable moments? If the answer isn’t an enthusiastic yes, you’ve got more work to do.

The balance between automation and empathy isn’t a problem to solve—it’s a tension to manage, constantly and thoughtfully. It requires ongoing attention, iteration, and a genuine commitment to putting patient experience at the center of every technology decision. If you do it right, you’ll make healthcare more efficient and humane.

Ultimately, healthcare is about people helping people through difficult times. AI should amplify that human mission, not replace it. When we design with that truth at the center of everything we build, we create technology that doesn’t just work. It cares.